Deep Learning: Computer Vision

Content

- Content

- Short Summary of CNN

- CNN Convolution On RGB Images

- Backpropagation In Convolutional Neural Networks

- VGG Model

- Best deep CNN architectures and their principles: from AlexNet to EfficientNet

- How does CNN work ? Explain the implementation details?

- Explain the back propagation steps of Convolution Neural Network?

- Linear Classification

- Linear Classification - Loss Function, Optimization

- Siamese Network

- Object Detection

- What is RCNN?

- What is SPP-net?

- What is Fast-RCNN?

- Object detection with RCNN

- List of Object Detection Algorithms and Repositories

- What is YOLO?

- Exercise:

Short Summary of CNN

- The interest in CNN started with AlexNet in 2012 and it has grown exponentially ever since.

- The main advantage of CNN compared to its predecessors is that it automatically detects the important features without any human supervision.

- CNN is also computationally efficient. It uses special convolution and pooling operations and performs parameter sharing. This enables CNN models to run on any device, making them universally attractive.

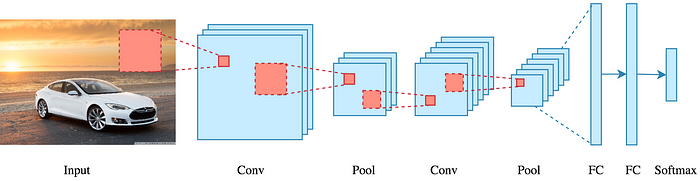

Architecture

- All CNN models follow a similar architecture, as shown in the figure below.

Convolution

- We perform a series

convolution+pooling operations, followed by a number of fully connected layers.

- The green area where the convolution operation takes place is called the

receptive field.

- NOTE: We perform multiple convolutions on an input, each using a different filter and resulting in a distinct feature map. We then stack all these feature maps together and that becomes the final output of the convolution layer.

Non-Linearity

- We again pass the result of the convolution operation through

reluorLeaky-Reluactivation function. So the values in the final feature maps are not actually the sums, but the relu function applied to them.

Stride and Padding

- Stride specifies how much we move the convolution filter at each step. By default the value is 1

- We see that the size of the feature map is smaller than the input, because the convolution filter needs to be contained in the input. If we want to maintain the same dimensionality, we can use padding to surround the input with zeros.

- The gray area around the input is the padding. We either pad with zeros or the values on the edge.

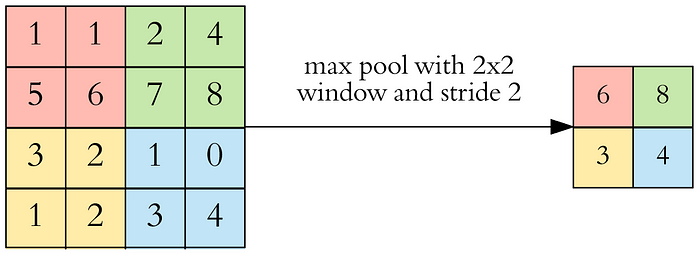

Pooling

- After a convolution operation we usually perform pooling to

reduce the dimensionality. This enables us to reduce the number of parameters, which both shortens the training time and combats overfitting. Pooling layers downsample each feature map independently, reducing the height and width, keeping the depth intact.

- In CNN architectures, pooling is typically performed with 2x2 windows, stride 2 and no padding. While convolution is done with 3x3 windows, stride 1 and with padding.

Hyperparameters

- Filter size: we typically use

3x3filters, but5x5or7x7are also used depending on the application. - Filter count: this is the most variable parameter, it’s a power of two anywhere between 32 and 1024. Using more filters results in a more powerful model, but we risk overfitting due to increased parameter count.

-

Stride: we keep it at the default value 1. -

Padding: we usually use padding.

Fully Connected

- After the convolution + pooling layers we add a couple of fully connected layers to wrap up the CNN architecture.

- Remember that the output of both convolution and pooling layers are 3D volumes, but a fully connected layer expects a 1D vector of numbers. So we flatten the output of the final pooling layer to a vector and that becomes the input to the fully connected layer.

Training:

- You do not fix the filter coefficients, rather you learn them over several iterations of training. The initialization can be random, or can be based on pre-trained model weights (such as those from the ‘modelzoo’ in github repos for popular models such as Alexnet, VGG, etc)

- Once you decide the filter size, we randomly initialize the weight of the filter and allow back propagation algorithm to learn weights automatically.

CNN Dimension Analysis:

The input dimensions are as follows

- $W_I = 227$

- $H_I = 227$

- $D_I = 3$

- The filter is of scale $F = 11$, i.e

11x11x3, where 3 is the same depth as $D_I$ . - We apply $96$ Filter operations, so therefore $K = 96$

- We do not take any padding ($P=0$)

- We choose a stride length of $S = 4$

- Thus, going by the above information, the output volume can be calculated as follows:

- $W_O = \frac{W_I - F + 2P}{S}+1=55$

- $H_O = \frac{H_I - F + 2P}{S}+1=55$

- $D_O=K=96$

- Thus, the output of the convolutional layer has the dimensions

55x55x96.

Differences between Fully-connected DNNs and CNNs

- Sparse Connectivity

- Weight Sharing

How to understand the LeNet architecture?

Remember:

- Say I have an image size

64x64x3and filter size is4x4. When I willconvolvethis filter over the image, the filter will be applied on all the3channels of the image simultaneously, i.e across the depth of the image (in general volume). So image64x64x3and a filter4x4x3(i.e the initial filter4x4is expanded to the depth and becomes4x4x3) is applied and a scalar value is obtained after all the matrix element wise multiplication and sum. Next we will stride i.e convolve across the height and width of the image (but not across the depth as the filter depth and image depth are same) and get the next scalar value. So after the convolution I will get a set of scalar values i.e neurons arranged over a 2D plane.- So, a 3D filter when applied on 3D volume (when both depths are same) gives a 2D plane of neurons i.e scalar.

- So if K such filters are used there will be K such 2D planes

- Next we apply non-linearity over those values by applying some activation function

- But when applying max-pooling, the max-pooing matrix will not be expanded across the depth and will be applied independently on the all the 2D planes (stacked together) obtained after the convolution followed by activation. So after the max-pooling the depth of the volume will be same as the depth of the last convolution step. [check Lecture 14, of Convolution Neural Network, PadhAI, in guvi.in]

References:

CNN Convolution On RGB Images

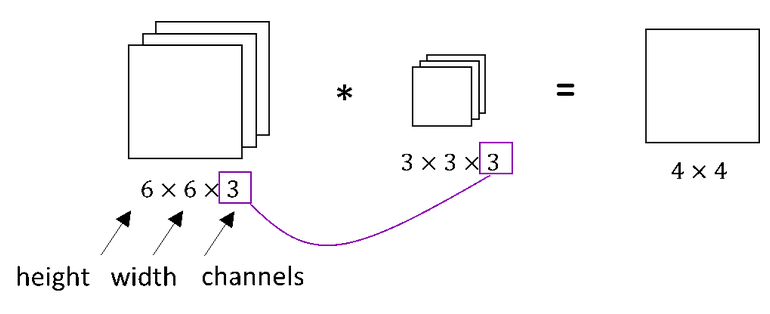

For example, if we want to detect features, not just in a grayscale image, but in an RGB image.

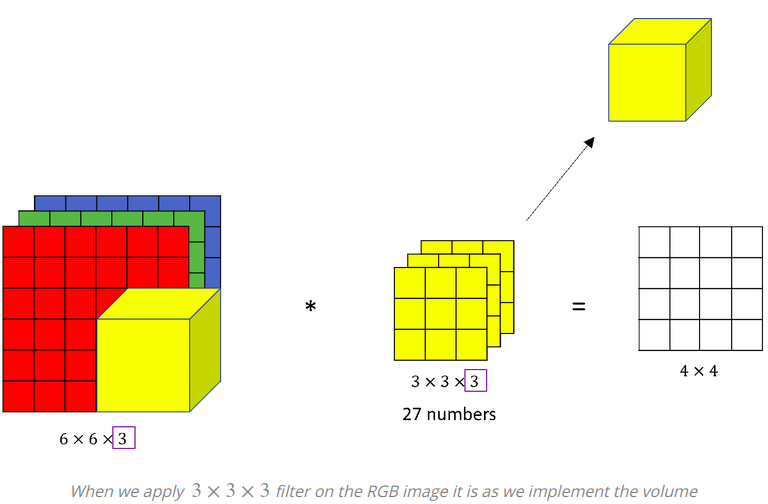

Let’s name them: this first $6$ here is the height of the image, the second 6 is the width, and the 3 is the number of channels. Similarly, our filter also have a height, width and the number of channels. Number of channels in our image must match the number of channels in our filter, so these two numbers have to be equal. The output of this will be a 4×4 image, and notice this is 4×4×1, there’s no longer 3 at the end. Look at the image below.

Here we can see the 6×6×3 image and the 3×3×3 filter. The last number is the number of channels and it matches between the image and the filter. To simplify the drawing the 3×3×3 filter, we can draw it as a stack of three matrices. Sometimes, the filter is drawn as a three-dimensional cube as we can see in the image below.

![]() How does the computation work?

How does the computation work?

To compute the output of this convolution operation, we take the 3×3×3 filter and first place it in that most upper left position. Notice that 3×3×3 filter has 27 numbers. We take each of these 27 numbers and multiply them with the corresponding numbers from the red, green and blue channel. So, take the first nine numbers from red channel, then the three beneath it for the green channel, then three beneath it from the blue channel and multiply them with the corresponding 27 numbers covered by this yellow cube. Then, we add up all those numbers and this gives us the first number in the output. To compute the next output we take this cube and slide it over by one. Again we do the twenty-seven element wise multiplications and sum up 27 numbers and that gives us the next output.

By convention, in computer vision when you have an input with a certain height and width, and a number of channels, then your filter can have a different height and width, but number of channels will be the same. Again, notice that convolving a 6×6×3 volume with a 3×3×3 gives a 4×4 , a 2D output.

![]() Knowing how to convolve on volumes is crucial for building convolutional neural networks. New question is, what if we want to detect vertical edges and horizontal edges and maybe even 45° or 70° as well. In other words, what if we want to use multiple filters at the same time?

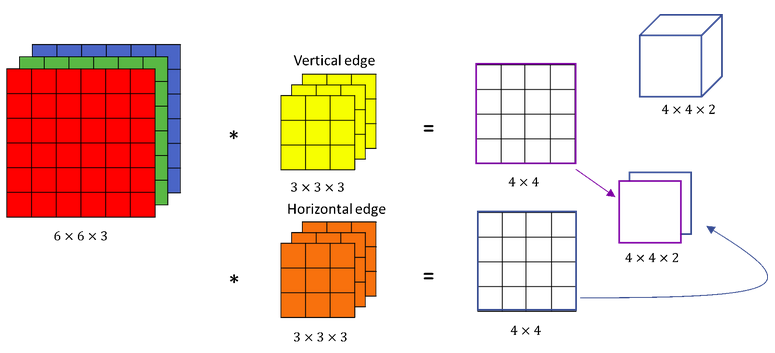

Knowing how to convolve on volumes is crucial for building convolutional neural networks. New question is, what if we want to detect vertical edges and horizontal edges and maybe even 45° or 70° as well. In other words, what if we want to use multiple filters at the same time?

Different filters are used for different feature extraction . e.g: one filter for vertical edge, one filter for horizontanl edge. Let’s see an example where the filter’s job is to identify “eye” in the image.

Look at the convolution output, where it finds “eye”, output non-zero value, remaining zeros only.

Effect of filters on original image

We can add a new second filter denoted by orange color, which could be a horizontal edge detector. Convolving an image with the filters gives us different 4×4 outputs. These two 4×4 outputs, can be stacked together obtaining a 4×4×2 output volume. The volume can be drawn this as a box of a 4×4×2 volume, where 2 denotes the fact that we used two different filters.

How one volume is converted into different volume after convolution?

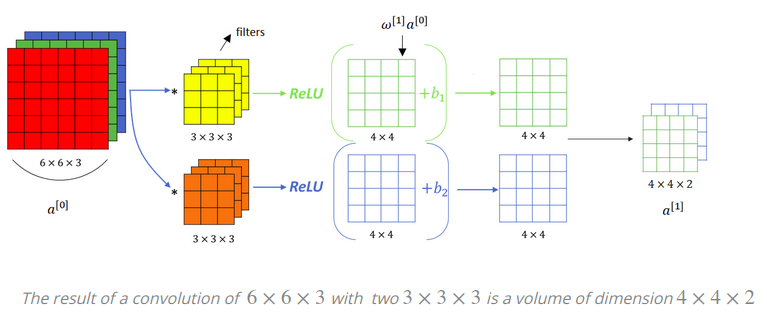

Convolving with the first filter gives us one 4×4 image output, and convolving with the second filter gives a different 4×4 output. To turn this into a convolutional neural network layer we need to add bias which is a scalar.

- Python broadcasting provides that bias is added to every element in that 4×4 output, or to all these sixteen elements. Then we will apply activation function, for example

𝑅𝑒𝐿𝑈()activation function. The same we will do with the output we got by applying the second 3×3×3 filter (kernel). So, once again we will add a different bias and then we will apply a 𝑅𝑒𝐿𝑈 activation function. After adding a bias and after applying a 𝑅𝑒𝐿𝑈 activation function dimensions of outputs remain the same, so we have two 4×4 matrices.

Next, we will repeat previous steps. Then we stack up with a 4×4×2 output. This computation have gone from 6×6×3 to a 4×4×2 and it represents one layer of a convolutional neural network.

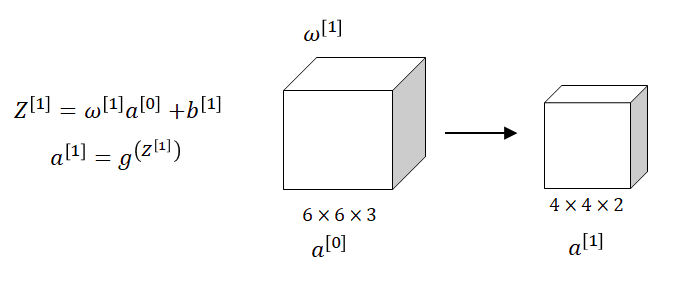

In neural networks one step of a forward propagation step was: $𝑍^{[1]}=𝑊^{[1]}×𝑎^{[0]}+𝑏^{[1]}$, where $𝑎^{[0]}=𝑥$. Then we applied the non-linearity (e.g. $ReLu()$) to get $𝑎^{[1]}=𝑔^{𝑍[𝑙]}$. The same idea we will apply in a layer of the Convolutional Neural Network.

*In case the above link is broken, click here and listen from $42:30$ minutes

Reference:

-

Part 1 - How do we make convolutions on RGB images?

- Part 2 - How do we make convolutions on RGB images?

-

Chapter - 4: Learn TensorFlow and deep learning, without a Ph.D.

-

Stackoverflow

Backpropagation In Convolutional Neural Networks

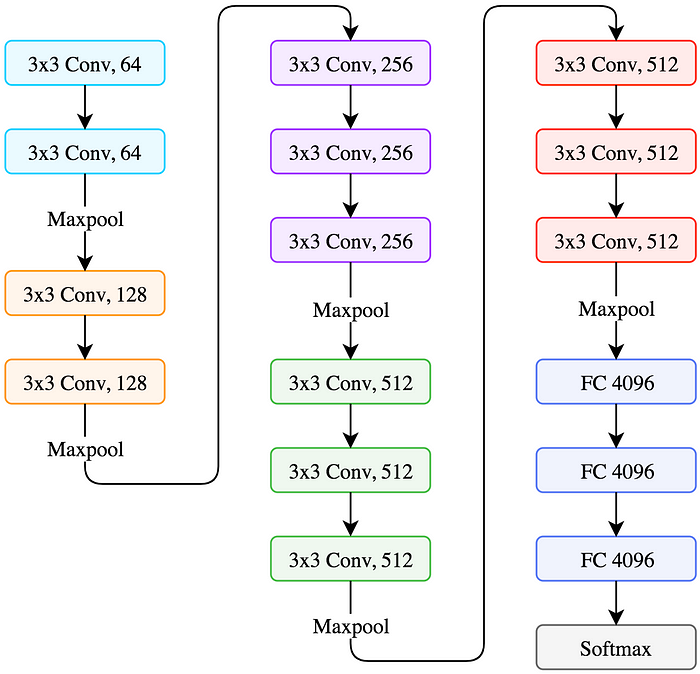

VGG Model

Let’s now take a look at an example state-of-the art CNN model from 2014. VGG is a convolutional neural network from researchers at Oxford’s Visual Geometry Group, hence the name VGG. It was the runner up of the ImageNet classification challenge with 7.3% error rate.

Among the best performing CNN models, VGG is remarkable for its simplicity. Let’s take a look at its architecture.

- It only uses 3x3 convolutions throughout the network.

-

NOTE: The two back to back

3x3convolutions have the effective receptive field of asingle 5x5convolution. And three stacked3x3convolutions have the receptive field of a single 7x7 one. Here’s the visualization of two stacked 3x3 convolutions resulting in 5x5.

-

Does that mean, if

n3x3filters are used back to back that is equivalent to applying 1 filter of size2n+1 -

Another advantage of stacking two convolutions instead of one is that we use two relu operations, and more non-linearity gives more power to the model.

-

The number of filters increase as we go deeper into the network. The spatial size of the feature maps decrease since we do pooling, but the depth of the volumes increase as we use more filters.

How to extract the features and feed to other model?

In the above VGG diagram the last 3 gree boxes are the Fully Connected (FC) layers and they are doing the classification. And before that FC layers, everything else are doing feature selection.

Now if you pay attention to the last (from left to right) red box (after the max pooling, size: 7x7x512), that encodes all the features for that image.

Now you can take that out, flatten it as a 1D vector and can pass it to other architecture.

Where is this useful?

Say you are working on Image to Caption generation task. There task is to generate caption given image and previous captions. So in this Encoder-Decoder model, instead of feeding the ras image (pixels value as vector), we can first pass the Image through VGG16 and then extract that red box (refer above image), flatten it and pass that (encoded features of image) to the encoder model.

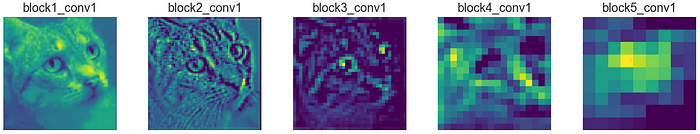

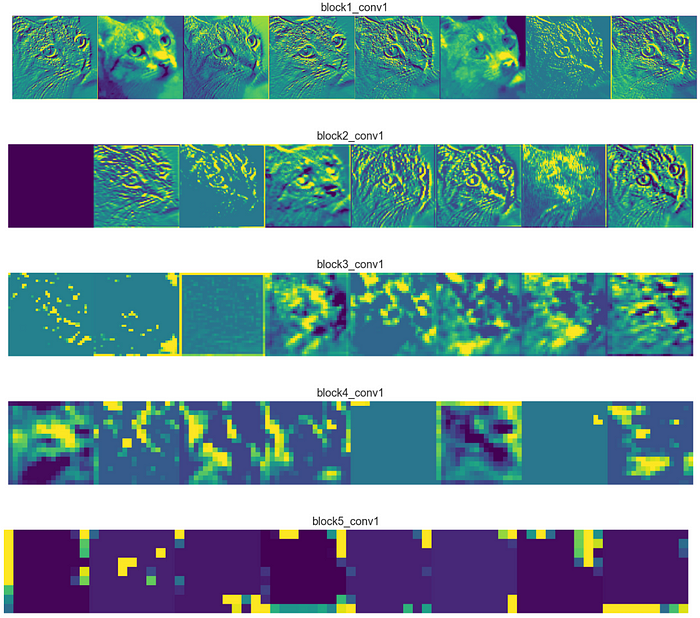

Visualizing Feature Maps

- VGG convolutional layers are named as follows: blockX_convY. For example the second filter in the third convolution block is called block3_conv2.

As we go deeper into the network, the feature maps look less like the original image and more like an abstract representation of it. As you can see in block3_conv1 the cat is somewhat visible, but after that it becomes unrecognizable. The reason is that deeper feature maps encode high level concepts like “cat nose” or “dog ear” while lower level feature maps detect simple edges and shapes. That’s why deeper feature maps contain less information about the image and more about the class of the image. They still encode useful features, but they are less visually interpretable by us.

The feature maps become sparser as we go deeper, meaning the filters detect less features. It makes sense because the filters in the first layers detect simple shapes, and every image contains those. But as we go deeper we start looking for more complex stuff like “dog tail” and they don’t appear in every image. That’s why in the first figure with 8 filters per layer, we see more of the feature maps as blank as we go deeper (block4_conv1 and block5_conv1)

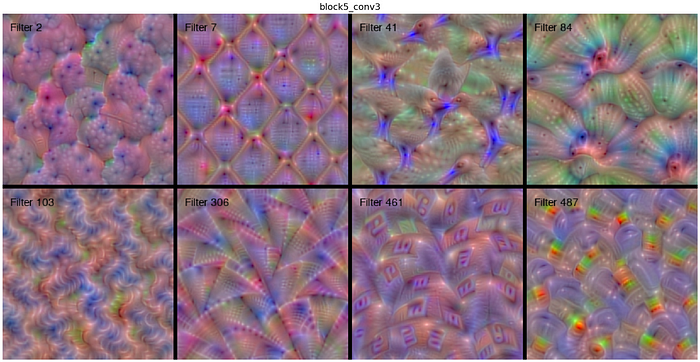

- Remember that each filter acts as a detector for a particular feature. The input image we generate will contain a lot of these features.

Filter and Featuremap Visualization

As we go deeper into the network, the filters build on top of each other, and learn to encode more complex patterns. For example filter 41 in block5_conv3 seems to be a bird detector. You can see multiple heads in different orientations, because the particular location of the bird in the image is not important, as long as it appears somewhere the filter will activate. That’s why the filter tries to detect the bird head in several positions by encoding it in multiple locations in the filter.

Reference:

Best deep CNN architectures and their principles: from AlexNet to EfficientNet

How does CNN work ? Explain the implementation details?

Explain the back propagation steps of Convolution Neural Network?

- Andrej Karpathy YouTube

- Andrej Karpathy Medium

- summary short

- MUST READ: how to compute backprop in program,

Linear Classification

-

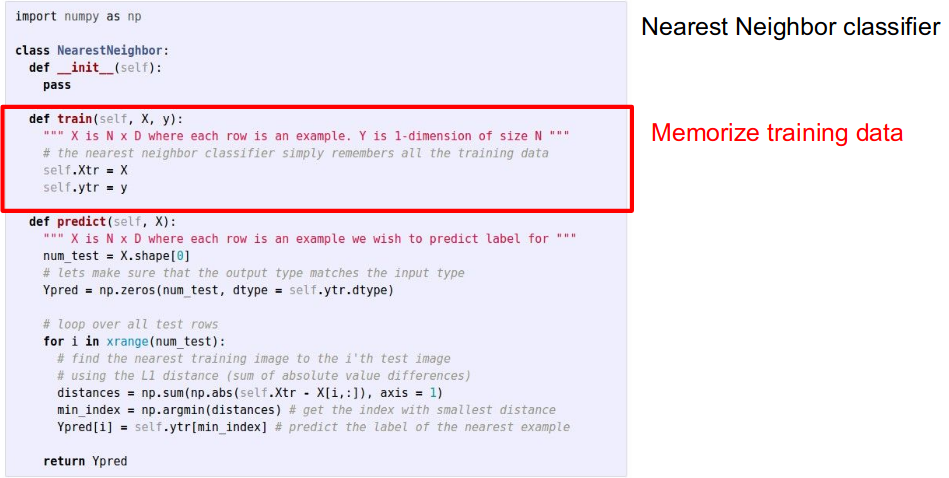

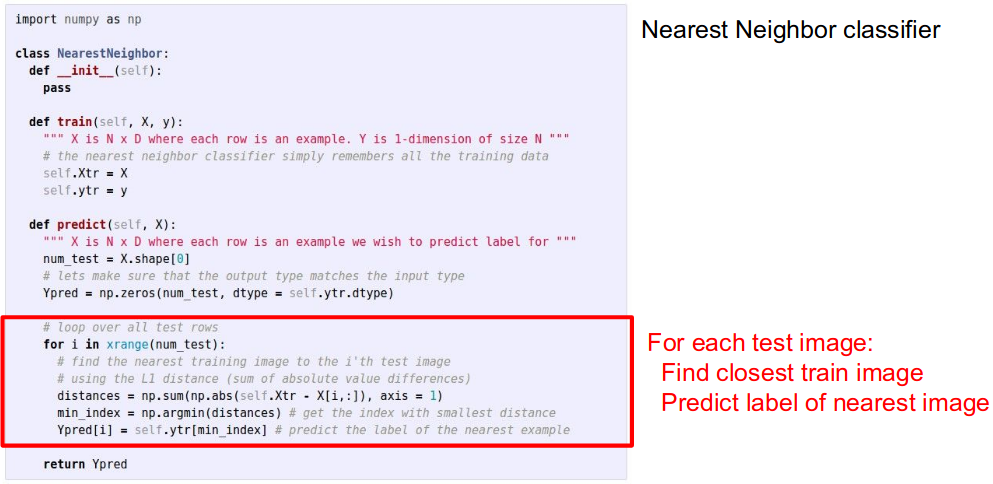

Q: With N examples, how fast are training and prediction?

-

Ans:

- Train O(1),

- Predict O(N)

- This is bad: we want classifiers that are fastat prediction; slow for training is ok

-

Ans:

Reference:

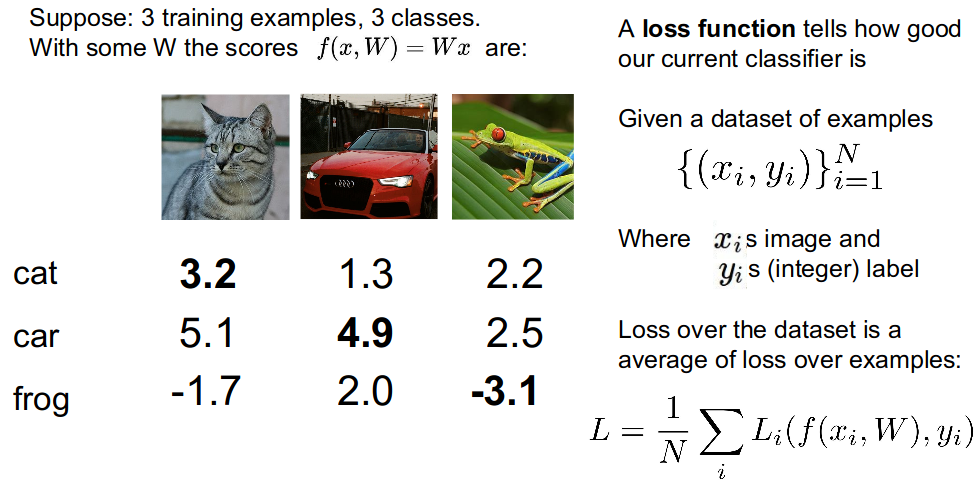

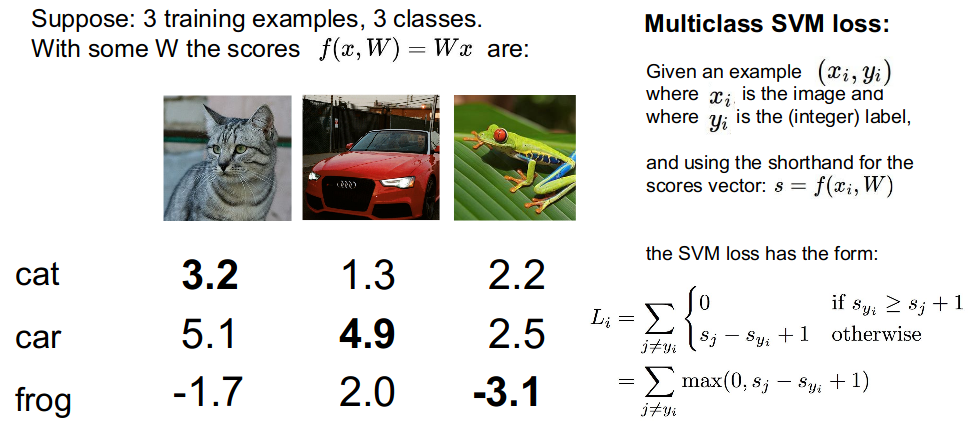

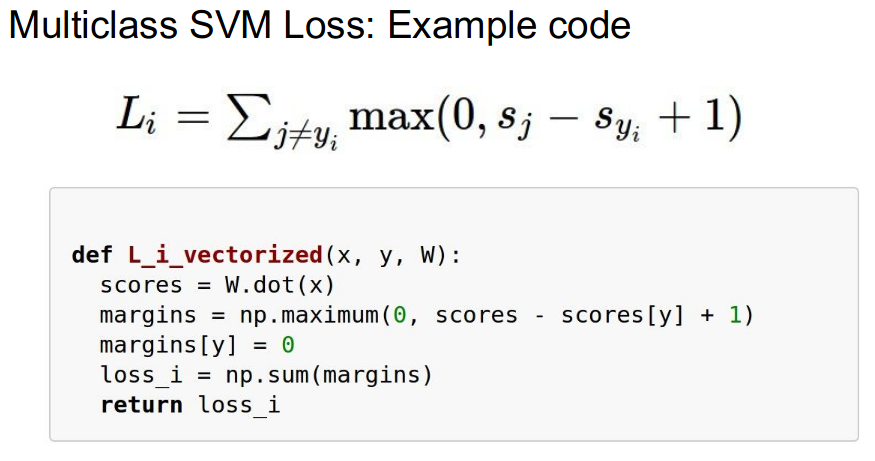

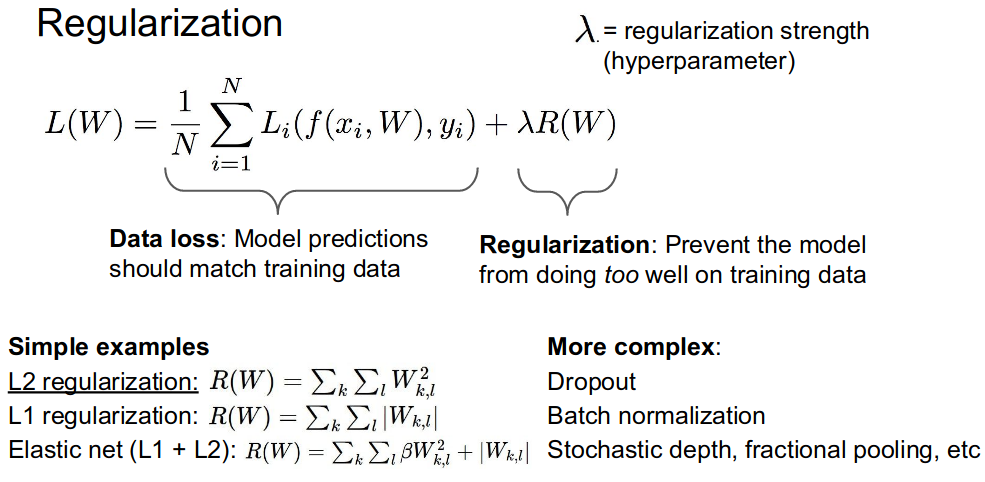

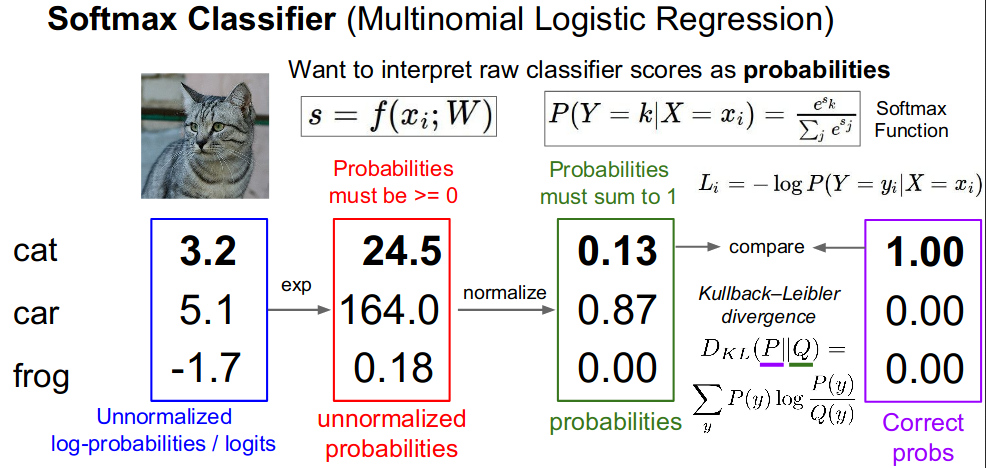

Linear Classification - Loss Function, Optimization

- Suppose that we found a $W$ such that $L = 0$. Is this $W$ unique?

- NO. $2W$ also has $L = 0$

Why regularize?

- Express preferences over weights

- Make the model simple so it works on test data

- Improve optimization by adding curvature

- Q1: What is the

min/maxpossible loss $L_i$?- A: min $0$, max $\infin$

- Q2: At initialization all s (score) will be approximately equal; what is the loss?

- A: $\log(\text{number Of Class})$, e.g $\log(10)$ ≈ $2.3$

Reference:

Siamese Network

Siamese networks are a special type of neural network architecture. Instead of a model learning to classify its inputs, the neural networks learns to differentiate between two inputs. It learns the similarity between them.

A twin neural network (sometimes called a Siamese Network, though this term is frowned upon) is an artificial neural network that uses the same weights while working in tandem (having two things arranged one in front of the other) on two different input vectors to compute comparable output vectors. Often one of the output vectors is precomputed, thus forming a baseline against which the other output vector is compared. This is similar to comparing fingerprints but can be described more technically as a distance function for locality-sensitive hashing.

It is possible to make a kind of structure that is functional similar to a siamese network, but implements a slightly different function. This is typically used for comparing similar instances in different type sets.

Uses of similarity measures where a twin network might be used are such things as

- Recognizing handwritten checks

- Automatic detection of faces in camera images

- Matching queries with indexed documents.

Learning

Learning in twin networks can be done with triplet loss or contrastive loss.

Triplet Loss

Triplet loss is a loss function for artificial neural networks where a baseline (anchor) input is compared to a positive (truthy) input and a negative (falsy) input. The distance from the baseline (anchor) input to the positive (truthy) input is minimized, and the distance from the baseline (anchor) input to the negative (falsy) input is maximized. wiki

- minimize distance(baseline,truth)

- maximize distance(baseline,false)

The negative (false) vector will force learning in the network, while the positive vector (truth) will act like a regularizer.

Predefined metrics, Euclidean distance metric

The common learning goal is to minimize a distance metric for similar objects and maximize for distinct ones. This gives a loss function like

Twin Networks for Object Tracking

Twin networks have been used in object tracking because of its unique two tandem inputs and similarity measurement. In object tracking, one input of the twin network is user pre-selected exemplar image, the other input is a larger search image, which twin network’s job is to locate exemplar inside of search image. By measuring the similarity between exemplar and each part of the search image, a map of similarity score can be given by the twin network.

Furthermore, using a Fully Convolutional Network, the process of computing each sector’s similarity score can be replaced with only one cross correlation layer.

![]() Reference:

Reference:

- wiki: Siamese network

- wiki: triplet loss

- blog1

- blog:Siamese Networks: Algorithm, Applications And PyTorch Implementation

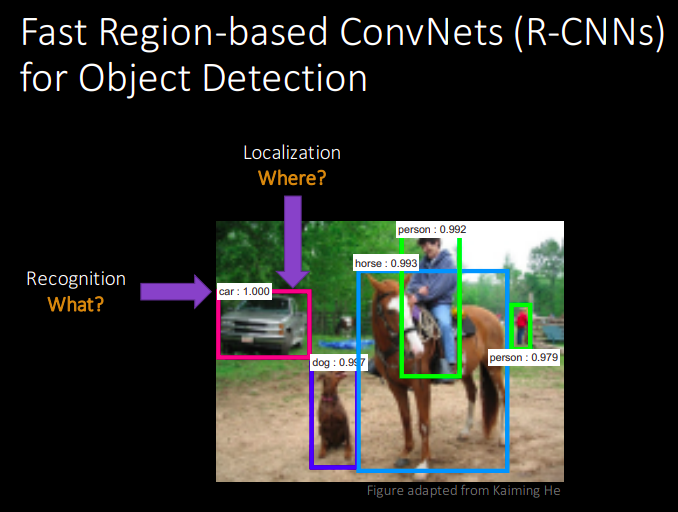

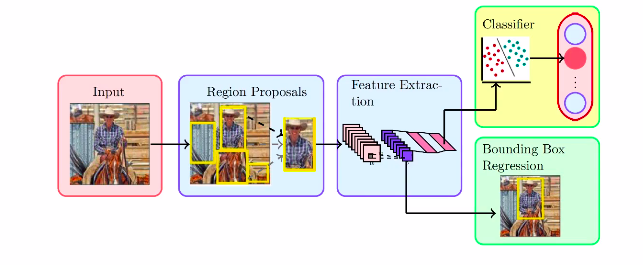

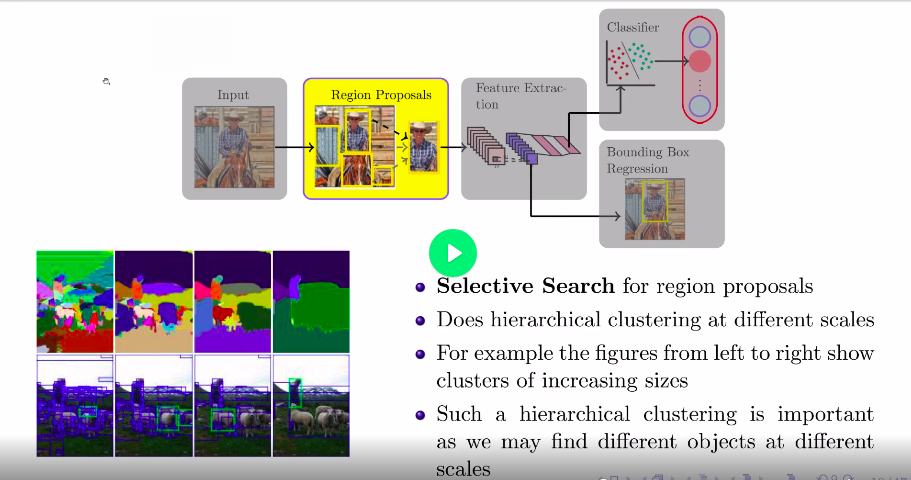

Object Detection

To build a model that can detect and localize specific objects in images.

Learning both regression and classification together.

Regression: 4 bounding box co-ordinates Classification: Object Label

Bounding box

-

Ground Truth Bounding Box: 4-dimensional array representing the rectangle which surrounds the ground truth object in the image (related to the dataset) -

Anchor Box: 4-dimensional array representing the rectangular patch of the input image the model looks at, to figure out which objects it contains (related to the model) -

Anchor Box Activations or Predicted Bounding Box: 4-dimensional array which should be as close as possible to the Ground Truth Bounding Box. I found the difference between Anchor Box and its Activations extremely confusing. In fact, they are the same thing. The Activations are nothing else that an updated version of the Anchor Box as the training phase proceeds. We keep them separated just because (in this specific implementation of SSD) we apply some position/size constraints to the Activations, based on the position/size of the original Anchor. The job of the Anchor Box is to fit the Ground Truth Bounding Box (and its class) as well as possible. Nothing else.

Reference:

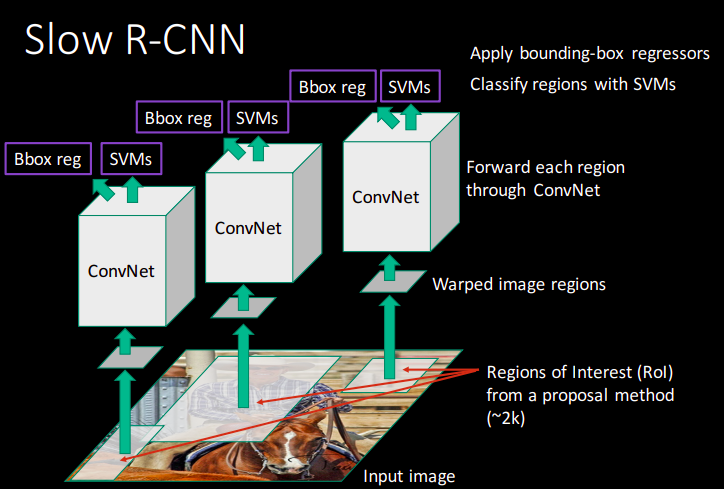

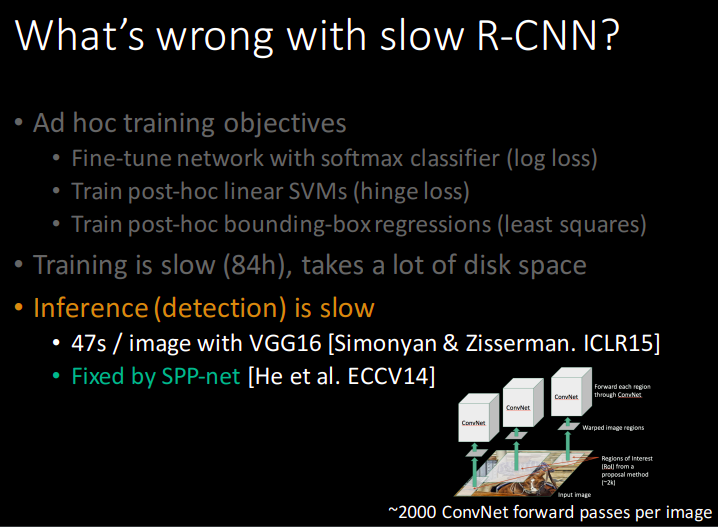

What is RCNN?

RCNN: Region Based CNN for Object Detection

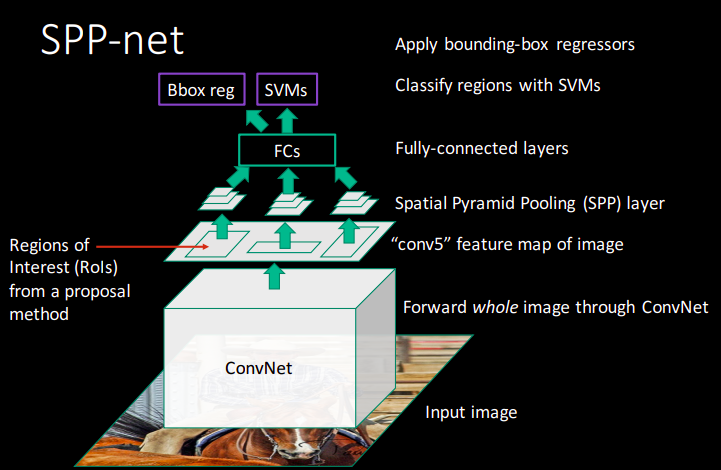

What is SPP-net?

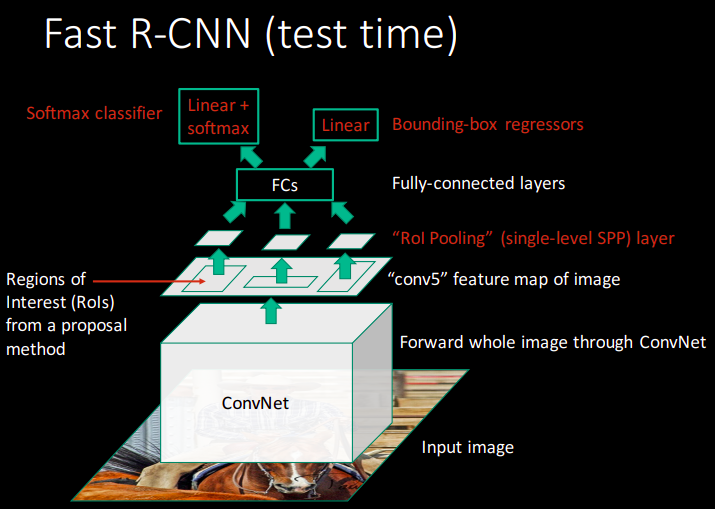

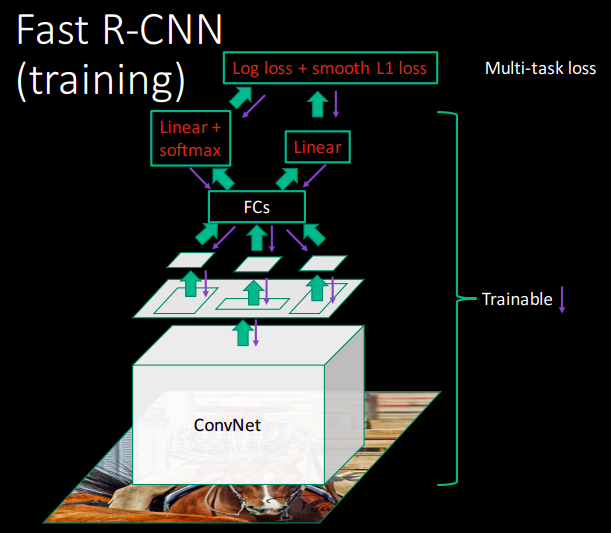

What is Fast-RCNN?

- Fast test-time line SPP-net

- One network, trained in one stage

- Higher mean average precision than slow RCNN and SPP-net

Reference:

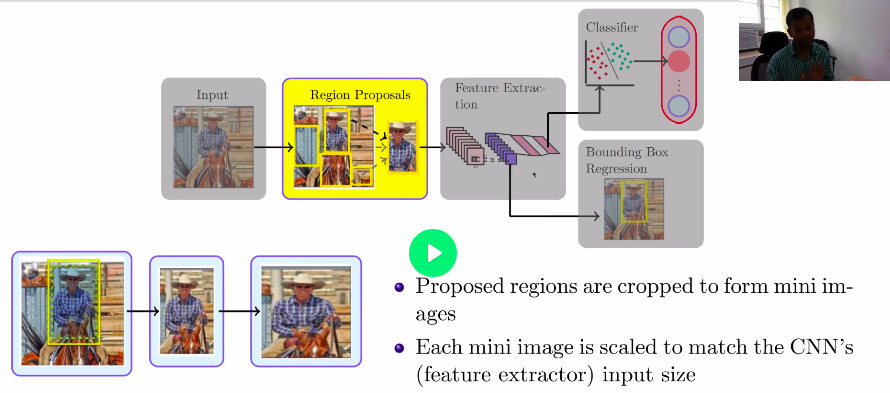

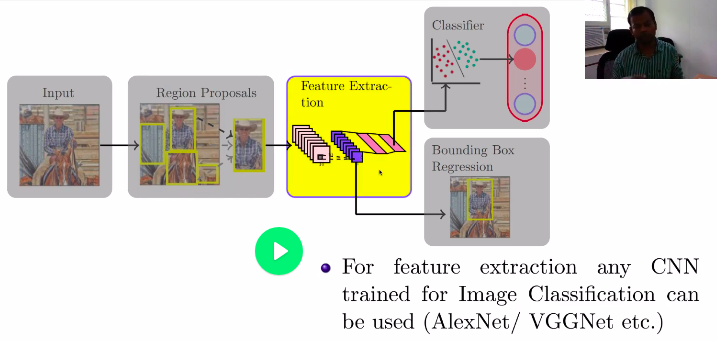

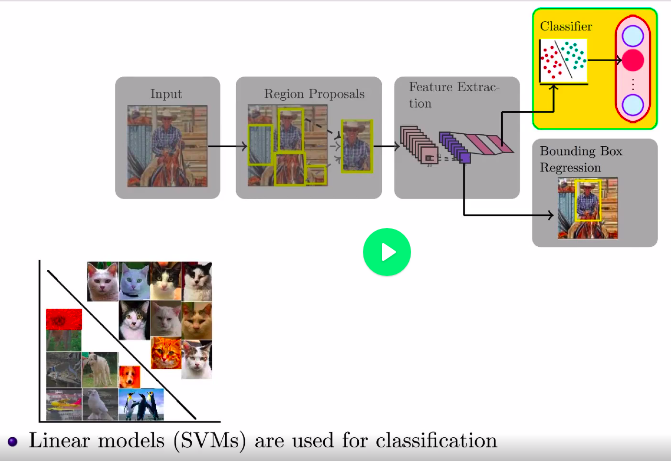

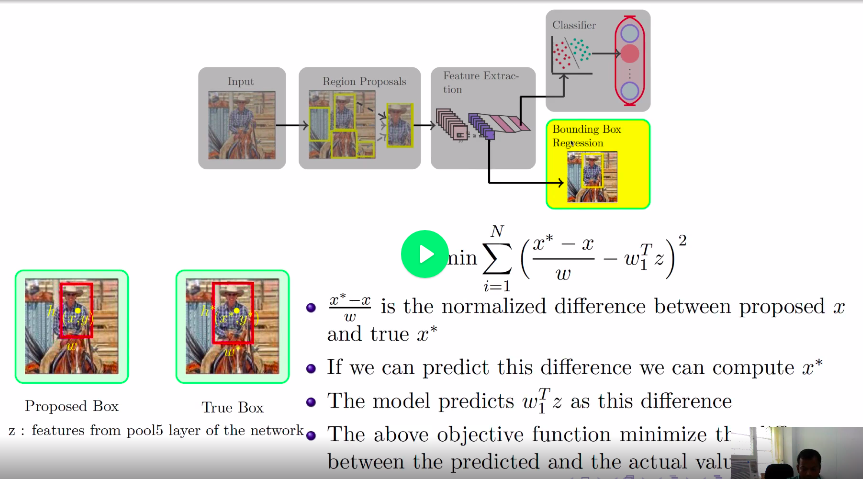

Object detection with RCNN

Steps:

Region Proposal

Feature Extraction

Classification

Regression

Similarly e calculate for $y$ coordinate.

Reference:

- Padhai, Prof. Mikesh, Lecture: Object Detection

List of Object Detection Algorithms and Repositories

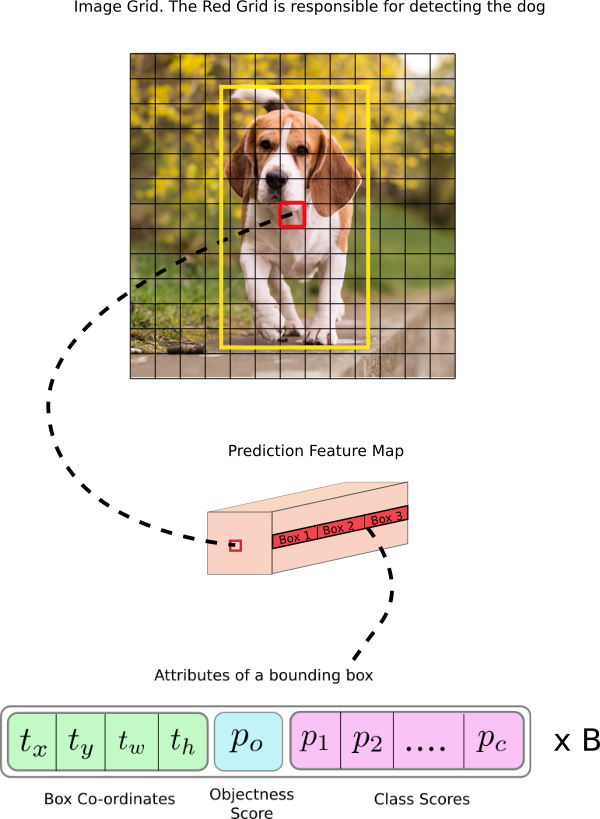

What is YOLO?

YOLO: You Only Look Once

Reference

Exercise:

- Face Recognition

- Single Shot Detection (SSD)